Reblogged from the Bowdoin News: “Workshop Gives Faculty the Keys to a Digital World”

Nearly two dozen Bowdoin faculty members are taking a turn as students in a four-day course for faculty titled “Digital Humanities @Bowdoin,” taught January 13-16 as part of the College’s new Digital and Computational Studies Initiative.

It was the first day of class, and five rows of students were seated expectantly – some a little nervously – in a Searles computer lab. “In the next half hour I’m going to teach you everything I know about computers,” said Professor of Computer Science Eric Chown to his audience – which consisted not of undergrads but of nearly two dozen Bowdoin faculty members, representing disciplines such as Romance languages, film studies, art, chemistry, English, history, German, Russian, environmental studies, and math.

Although Chown may have been exaggerating just a little bit for effect, it’s no stretch to say that in today’s increasingly digital world, understanding even the basics of computer science can make a world of difference for scholars and teachers in any field. “Computers are good at things we’re not good at: reading 10,000 books at once, or counting the number of pixels in an image that are more red than green,” Chown said. “They’re fantastic at these things, and these things lead us to think a little bit differently about what we’re studying.”
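
Chown's second example is simple enough to sketch. The snippet below is an illustrative assumption, not code from the workshop. It treats an image as a plain list of (R, G, B) tuples with 8-bit values, and the helper name `count_redder_than_green` is hypothetical. Loading real pixels would need an imaging library such as Pillow, whose `Image.getdata()` yields tuples in this form for RGB images.

```python
# Hypothetical sketch: count pixels whose red channel exceeds their green channel.
# Each pixel is an (R, G, B) tuple with values from 0 to 255.

def count_redder_than_green(pixels):
    """Return how many (R, G, B) pixels have a larger red value than green value."""
    return sum(1 for r, g, _b in pixels if r > g)


# A tiny made-up 2x2 "image", flattened into a list of pixels.
sample = [(200, 50, 40), (10, 220, 30), (128, 128, 128), (90, 60, 200)]
print(count_redder_than_green(sample))  # 2
```

Scaling this to a full painting is the same loop run over millions of pixels, which is the kind of tedious counting Chown describes computers as being "fantastic at."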

How do computer programs simplify a complex world into zeros and ones, and how do simple components interact to perform highly complex tasks? What kinds of methods and tools can harness computing power, and what limitations do they face? Those are some of the questions the faculty-turned-students hoped to explore in the four-day workshop “Digital Humanities @Bowdoin,” co-taught by Chown and Professor of Art History Pamela Fletcher.

- Ann Kibbie – English

- Allison Cooper – Romance Languages

- Anne Goodyear – Museum of Art

- Matthew Klingle – History/ES

- Marilyn Reizbaum – English

While some participants came into the class more comfortable than others with digital methods, all were convinced of the need to know more. “The digital humanities is exciting and folks want to know what’s going on, or they want to dip their toe into it – because they see that colleagues at other institutions are doing projects, they see that agencies tend to fund people who are doing digital humanities projects, or they see that their students are interested in it,” Chown said.

So many faculty members wanted to sign up for the course that some had to be turned away. “The level of faculty interest is extraordinary,” said Dean for Academic Affairs Cristle Collins Judd. “It not only reflects a strong commitment to the continued development of faculty research and teaching, but also highlights the important opportunities offered by the Digital and Computational Studies Initiative.”

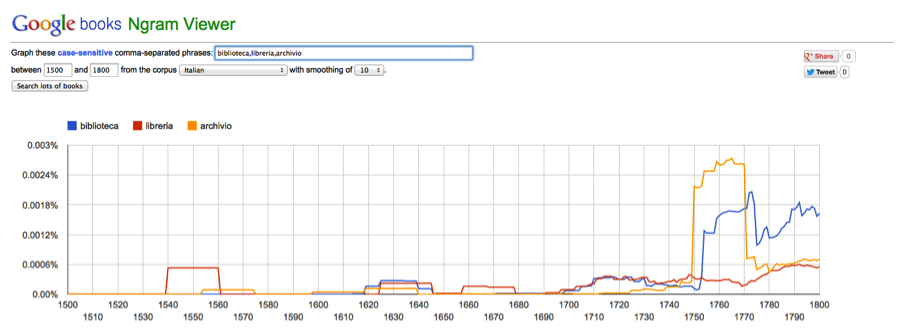

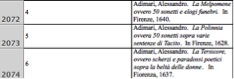

Since its inception in 2012, that initiative has gained impressive momentum. In addition to the steering committee headed by Fletcher and Chown, two full-time faculty have joined the cause: Postdoctoral Fellow in the Humanities Crystal Hall – whose own digital humanities research has led to her book Galileo’s Library, which will be published in February 2014 – and New Media and Data Visualization Specialist Jen Jack Gieseking.

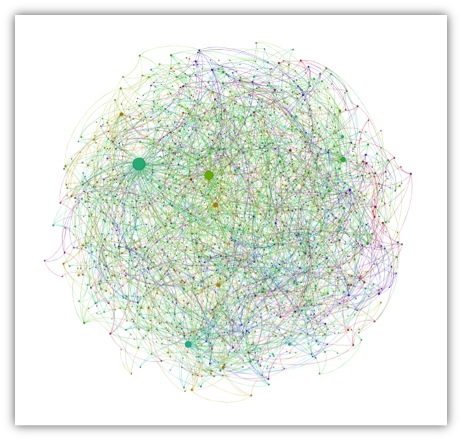

The initiative also debuted a full-fledged course in fall 2013: “Gateway to the Digital Humanities,” taught by the same team of professors (read about the student course on pp. 14–15 of the Fall 2013 Bowdoin Magazine). The course covered four major categories of digital humanities techniques – image analysis, text analysis, spatial analysis, and network analysis – a breakdown inspired by a November 2012 talk at Bowdoin by digital humanities specialist Anne Helmreich.

Fletcher and Chown had to turn down a deluge of requests from faculty members to sit in on the fall course, prompting them to start thinking about developing a January workshop for faculty. After gleaning some ideas from a Northeast Regional Computing Program consortium in Boston, members of the initiative began drafting a program based on the fall course – which, according to both professors and students, was a resounding success.

The first day of the faculty workshop gave an overview of how computers work and what the digital humanities can accomplish, with some image analysis built in (Chown demonstrated, for instance, a basic way of analyzing the color choices in Rembrandt’s “The Night Watch”).

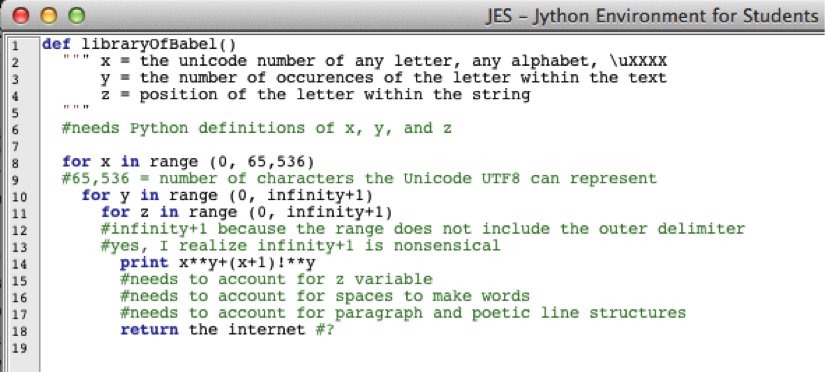

“Programming is about abstracting, and scaling over and over and over, until you’re doing things that look really complicated – but the individual parts of it are very simple,” Chown said. “What makes programming so exciting in the digital humanities is that you can play; you can try things out. You can reverse the colors of a Van Gogh and find out that he was playing with negative space – something I discovered on my own just by playing around.”
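
Reversing an image's colors, as in Chown's Van Gogh experiment, amounts to subtracting each 8-bit channel value from 255. The sketch below is hypothetical and uses plain (R, G, B) tuples rather than any particular workshop tool; Pillow's `ImageOps.invert` performs the same operation on a whole RGB image.

```python
# Hypothetical sketch: produce the photographic negative of an image
# represented as a list of 8-bit (R, G, B) tuples.

def invert_pixel(pixel):
    """Return the negative of one pixel: each channel becomes 255 minus its value."""
    r, g, b = pixel
    return (255 - r, 255 - g, 255 - b)


def invert_image(pixels):
    """Invert every pixel in the list."""
    return [invert_pixel(p) for p in pixels]


sample = [(0, 0, 0), (255, 255, 255), (200, 150, 30)]
print(invert_image(sample))  # [(255, 255, 255), (0, 0, 0), (55, 105, 225)]
```

Because inverting twice returns the original values, this is a safe kind of "play": nothing about the source image is lost.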

The theoretical overviews were followed by hands-on experience: in their first lab assignment, participants discovered what it means to work at the most basic level of a program. By typing short commands in the Python programming language, they made tiny neon turtles maneuver around their computer screens to draw geometric shapes.
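
The turtle shapes rest on a simple rule: move forward, turn by the exterior angle of 360/n degrees, and repeat n times, and the path closes into a regular n-sided polygon. Python's standard `turtle` module provides `forward()` and `left()` for this, but it opens a drawing window. The sketch below is an assumption rather than the workshop's lab code: it does the same arithmetic by hand and returns the points a turtle would visit. The name `polygon_path` is hypothetical.

```python
import math


def polygon_path(sides, length=100.0):
    """Trace a regular polygon turtle-style and return the visited (x, y) points.

    The "turtle" starts at the origin facing east (0 degrees), moves forward by
    `length`, then turns left by the exterior angle 360 / sides, `sides` times.
    """
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    turn = 360.0 / sides
    for _ in range(sides):
        # Equivalent of turtle.forward(length)
        x += length * math.cos(math.radians(heading))
        y += length * math.sin(math.radians(heading))
        points.append((x, y))
        # Equivalent of turtle.left(turn)
        heading += turn
    return points


square = polygon_path(4)
print(square)
```

The last point lands back at the origin (up to floating-point rounding), which is why the turtle's drawing closes into a shape.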

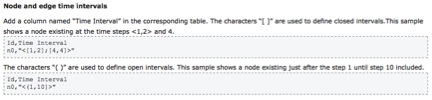

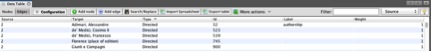

Throughout the rest of the course, participants had an opportunity to focus on the remaining three categories of analysis. Tuesday covered text analysis (using a tool called Voyant to assess word frequency, for instance), and Wednesday covered spatial analysis, with a special look at GIS projects that participants are already involved in. Today, the final session of the workshop, they’ll cover network analysis, using tools such as Gephi.
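
Voyant is a web-based tool, but the word-frequency count at its core can be sketched in a few lines of Python. This is an illustrative stand-in, not Voyant's implementation: it lowercases the text, splits it into words with a simple regular expression, optionally drops stop words, and tallies the rest with `collections.Counter`. The function name and the short sample passage are hypothetical.

```python
import re
from collections import Counter


def word_frequencies(text, top_n=5, stopwords=frozenset()):
    """Return the top_n most frequent words in text as (word, count) pairs."""
    # Lowercase so "The" and "the" count as one word; keep apostrophes
    # so contractions like "we're" stay whole.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in stopwords)
    return counts.most_common(top_n)


passage = ("The digital humanities is exciting, and the humanities "
           "are a great source of ideas.")
print(word_frequencies(passage, top_n=2))
print(word_frequencies(passage, top_n=1, stopwords={"the", "and", "a", "is"}))  # [('humanities', 2)]
```

Filtering stop words matters: without it, common function words like "the" tend to crowd the top of any frequency list.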

The goal of the course is not for everyone to become an expert programmer. It’s about gaining basic fluency in a discipline that’s closely tied to just about every other discipline. “Increasingly, the format for information circulation is digital,” Hall said. “Staying current in any field means at least understanding what’s going on with the digital component – the implications of interface choice, of media choices. It’s incredibly important.”

Just as important as the content covered in the workshop is the opportunity to exchange knowledge and ideas with the instructors and fellow participants. Humanities professors are getting a new perspective on their own fields from computer scientists, and the opposite is also true. “I see the humanities as a great source of ideas,” Chown said. For instance, humanities projects often run up against the limitations of digital tools that aren’t quite suited to the task at hand – providing fertile ground for innovation in computer science.

“The fun thing about this initiative has been gathering up a lot of people from art history, and computer science, and sociology, and earth and oceanographic studies, and all of these other disciplines, and getting them in a room, and having them talk about this stuff,” Chown said. “The ideas that have come out of it have just been phenomenal.”

Why are you taking the Gateway course?