Our source code has been publicly available on GitHub since we started a new repository last summer. However, we didn’t have a stable master worth releasing to the public until RoboCup in Graz.

Now we’ve decided to do a real ‘source release’ of the code we played in the Finals, which includes relatively stable versions of all the major modules needed for soccer play. In addition, we’ve written pages of documentation for the motion and vision systems and are revamping the online documentation on our wiki. Once we finish more of the documentation and have a good draft of our Team Report, we’ll tag the release on GitHub and make an official announcement here.

Our hope is that by setting an example of sharing our code, we can convince other teams to share theirs as well, and help teams who don’t want to develop all their modules on their own. If you’re interested in giving feedback on our documentation before the code release, please take a look at our GettingStarted page and reply to this post, or email me directly (jstrom bowdoin edu).

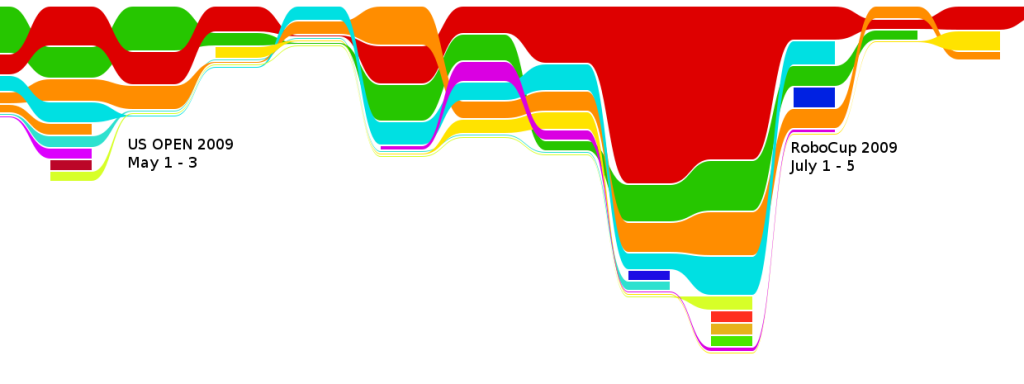

Below is a graph from github.com of our git commits leading up to RoboCup 2009.

NBites Dev History